Light

Last year, I took a look at a new depth-perceiving sensor system called Clarity from a company called Light. Originally developed for smartphone applications, Light pivoted a couple of years ago to develop its technology for automotive applications like advanced driver assistance systems (ADAS) and autonomous driving.

A lengthy comment thread followed, with plenty of questions about how Light’s technology works. The people at Light read the entire thread, then spoke with me to answer your questions.

The Ars commentariat’s questions fell into four themes: whether or not Clarity can work in low-light situations; the similarities to human vision and parallax; Clarity’s accuracy and reliability compared to other sensor modalities like lidar; and whether it’s similar to Tesla’s vision-only approach.

Headlights are required to drive at night

With regard to how Clarity performs at night and in low-light situations, the answer is pretty simple: We are required to drive with headlights on at night. “Most of the infrastructure for anything automotive has the assumption that there’s some exterior lighting, usually lighting on the vehicle,” said Prashant Velagaleti, Light’s chief product officer.

Similarly, there were some questions about how the sensor system handles dirt or occlusion. “One of the benefits of our approach is that we don’t pre-specify the cameras and their locations. Customers get to decide on a per-vehicle basis where they want to place them, and so, you know, many in passenger vehicles will put them behind the windshield,” Velagaleti told me. And of course, if your cameras are behind the windshield, it’s trivial to keep their view unobscured thanks to technology that has existed since 1903 that allows drivers of non-autonomous vehicles to drive in the rain or snow and see where they’re going.

“But when we talk about commercial applications, like a Class 8 truck or even an autonomous shuttle, they have sensor pods, and those sensor pods have whole cleaning mechanisms, some that are quite sophisticated. And that’s exactly the purpose—to keep that thing operational as much as possible, right? It’s not just about safety, it’s about uptime. And so if you can add some cleaning system that keeps the vehicle moving on the road at all times and you saved net-net, it’s advantageous. You’ve saved money,” Velagaleti said.

“Everyone just assumes the end state’s the first state, right? And we think those of us who are really tackling this from a pragmatic standpoint, it’s crawl, walk, run right here. Why shouldn’t people benefit from safety systems that are L2+ with what light clarity can offer by adding one more camera module; suddenly your car is much safer. We don’t have to wait till we get to all four for people to benefit from some of these technologies today,” Velagaleti told me.

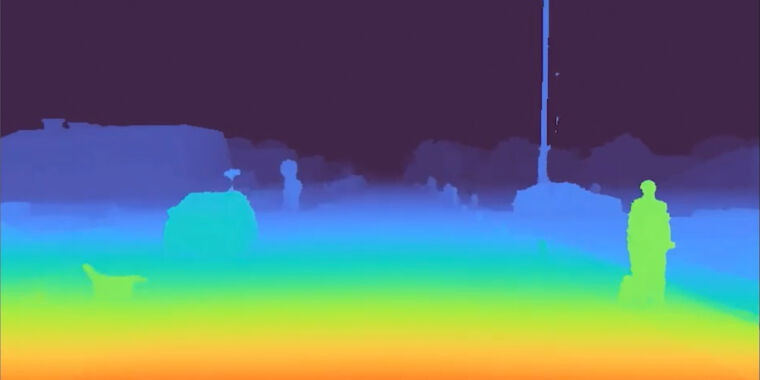

-

This is a frame captured by Clarity at about 60 mph in low light.

Light -

This is the matching frame showing the lidar point returns for the same piece of road. As you can see, the lidar resolution is a fraction of Clarity’s.

Light -

This is the reference image, and can you spot the deer by the side of the road?

Light

How does it compare to Tesla?

“When it comes to Tesla and Mobileye, for example, you know, both of those are machine learning-based systems. So as we like to say, you’ve got to know what something is in the world before you know where it is, right? And if you can’t figure out what it is, you fail,” said Dave Grannan, Light’s co-founder and CEO.

Unlike an ML-based approach, Clarity doesn’t care if a pixel belongs to a car or the road or a tree—that kind of perception happens further on in the stack. “We just look at pixels, and if two cameras can see the same object, we can measure it. That’s essentially a tagline without knowing what the object is. Later on, down the stack and perception layer, you want to then use both the image data and the depth data to better ascertain what is the object and is it necessary for me to alter my decision,” explained Boris Adjoin, senior director of technical product management at Light.

And no, that should not be interpreted as Light saying that ML is a waste of time. “Machine learning is an awesome breakthrough. If you can feed machine learning with this kind of sensor data, per frame, without any assumptions, that’s when real breakthroughs start to happen, because you have scale for every structure in the world. That is not something really any machine-learning model today that is in the field has the benefit of. Maybe it’s trained on 3D data, but it typically doesn’t get very much 3D data, because as you’ve seen with lidars, they’re accurate but sparse, and they don’t see very far away,” Velagaleti noted.

Meanwhile, Tesla’s system uses a single camera. “Tesla claims a billion miles of driving, and they still have these errors that we see very frequently with the latest release of FSD. Well, it’s important because you’re asking way too much of ML to have to derive things like depth and structures of the world, and it’s just, it’s a little opposite. It’s backward. And again, I think for every reason, it made a lot of sense for people to get something to market that does something.

“But if we really want the next change to happen, you can either believe that maybe a lidar will come to market that will be providing the kind of density you see here at a price point that everyone can afford, that’s robust in automotive environments, that’s manufacturable like in volume, or we can add another camera and add some signal processing and do it quickly. We can’t just keep asking a single camera with inferencing or structure from motion or some other technique like this to deal with a very complex world. And in a complex application space—I mean driving is not easy; we don’t let a 4-year-old do driving” Velagaleti said.

“I think Tesla has done a good job of highlighting how sophisticated a training system they have, you know, and it’s very impressive. I don’t think we’re here to critique Tesla. They made it their own chip, which is in and of itself, having done that before, that is non-trivial. So there’s a lot that is very impressive in Tesla’s approach. I think people then unfortunately assume that a Tesla is doing certain things that Tesla is not saying, so Tesla’s not doing stereo,” Velagaleti explained.

What about Subaru’s EyeSight stereo vision?

Grannan pointed out that the principles of stereo vision have been well-understood for quite a long time. He admitted that Light has not done as good a job as it could have in explaining how its system differs from Subaru’s EyeSight camera-only ADAS, which uses a pair of cameras mounted in a unit that lives behind the rear-view mirror at the top of the windshield.

“Really, what we’ve solved comes down to two things. The ability to handle these wide baselines of cameras far apart because when your cameras are far apart, you can see farther—that’s just physics. In Subaru EyeSight, they have to keep the cameras close together because they haven’t figured out how to keep them calibrated. That becomes a very hard problem when they’re far apart and not on the same piece of metal. That’s one. The other thing we’ve done is, most stereo systems are very good at edge detection, seeing the silhouette of the car of the person of the bicycle, and then just assuming the depth is the same throughout, right? So it’s called regularization or infill. We developed signal-processing algorithms that allow us to get depth for every pixel in the frame. It’s now much richer detail,” Grannan explained.

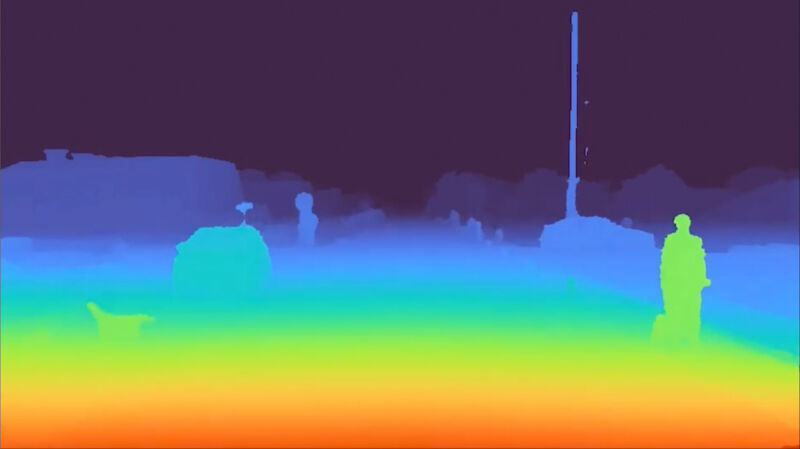

-

This frame was captured on a very rainy day.

Light -

And this is the corresponding lidar frame for the same scene. Notice how much detail is missing.

LIght -

Here’s the reference image for the Clarity and lidar images. You can see that the lidar completely missed the SUV to the left of the frame.

Light

“I believe we’re really the first robust implementation in stereo,” said Velagaleti. “What you will find across the board, Continental, Hitachi—I’m not gonna be overly specific about anyone provider’s technology—you will see that, there, they separate their cameras by only about 40 centimeters. And the reason they do that is that’s about as large of an array they can support. They have to build it very rigid in order for it to work,” Velagaleti explained.

“And if you think about it, the problem becomes exponentially harder when you go farther apart, as Dave said, because what is the size of a pixel and a camera module today? It’s about three microns. It’s very small, right? Now we’re seeing objects very far away. So if you put cameras far apart, the intent is you’re trying to accurately see something far away, which matters in most applications. But now if you’re off by a few pixels, which means you’re off by just a few microns, you’re not going to get accurate depth,” Velagaleti said.

“So what Light has solved, which is, this gets to the robustness of the thing, is we have been able to solve for every frame, we figure out where the cameras really are, how the images relate to each other, and then we derive depth very accurately. So basically, we’re robust, right? And this is how you can literally put two independent cameras without anything rigid between them. And we’re still working at a sub-pixel level, which means we’re sub-micron in terms of how we’re figuring out where things are in the world. And that has just never been done before,” Velagaleti continued.

That calibration process is apparently simple to perform in the factory, but the exact details of how Light does that is a trade secret. “But by virtue of being able to solve our calibration, that gives us robustness and it gives us flexibility. So that’s how I can tell you for any customer who comes to us, OEM or Tier One [supplier], they get to decide where they want to place their cameras or how many cameras they want to put and what kind of cameras they want to use. That’s because we solve for calibration,” Velagaleti said.

“The other key thing that I want to highlight that’s very different versus others—we don’t make assumptions. So what Dave said about edge detection and infill, right, basically most stereo systems today, they measure a certain portion of what they see. And then they basically guesstimate everything in between. Because they can’t actually do what we’re able to do, which is essentially measure every pixel we’re getting and derive depth for it,” Velagaleti told me.