Benj Edwards / Ars Technica

Last week, Swiss software engineer Matthias Bühlmann discovered that the popular image synthesis model Stable Diffusion could compress existing bitmapped images with fewer visual artifacts than JPEG or WebP at high compression ratios, though there are significant caveats.

Stable Diffusion is an AI image synthesis model that typically generates images based on text descriptions (called “prompts”). The AI model learned this ability by studying millions of images pulled from the Internet. During the training process, the model makes statistical associations between images and related words, building a much smaller representation of key information about each image and storing it as “weights,” which are mathematical values that represent what the AI image model knows, so to speak.

When Stable Diffusion analyzes and “compresses” images in this way, the results reside in what researchers call “latent space,” which is a way of saying that they exist as a sort of fuzzy potential that can be realized into images once they’re decoded. With Stable Diffusion 1.4, the weights file is roughly 4GB, but it represents knowledge about hundreds of millions of images.

While most people use Stable Diffusion with text prompts, Bühlmann cut out the text encoder and instead forced his images through Stable Diffusion’s image encoder process, which takes a low-precision 512×512 image and turns it into a higher-precision 64×64 latent space representation. At this point, the image exists at a much smaller data size than the original, but it can still be expanded (decoded) back into a 512×512 image with fairly good results.
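To make the size difference at this step concrete, here is a rough back-of-the-envelope calculation in Python. It is only a sketch under assumptions the article does not state: the input image is taken to have 3 color channels at 8 bits each, and the latent is taken to have 4 channels stored as 32-bit floats. The helper `raw_size_bytes` is a hypothetical name used for illustration.

```python
# Back-of-the-envelope size comparison for the encoder step described above.
# Assumptions (not from the article): the input image has 3 color channels at
# 8 bits each, and the latent has 4 channels stored as 32-bit floats.

def raw_size_bytes(width: int, height: int, channels: int, bits_per_value: int) -> int:
    """Uncompressed size of a dense width x height x channels array, in bytes."""
    return width * height * channels * bits_per_value // 8

image_bytes = raw_size_bytes(512, 512, channels=3, bits_per_value=8)
latent_bytes = raw_size_bytes(64, 64, channels=4, bits_per_value=32)

print(f"512x512 RGB image: {image_bytes:,} bytes")
print(f"64x64 latent:      {latent_bytes:,} bytes")
print(f"reduction factor:  {image_bytes / latent_bytes:.0f}x")
```

Under these assumptions, the latent holds far fewer values than the original image even though each value is stored at higher precision, which is why the representation ends up smaller overall.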

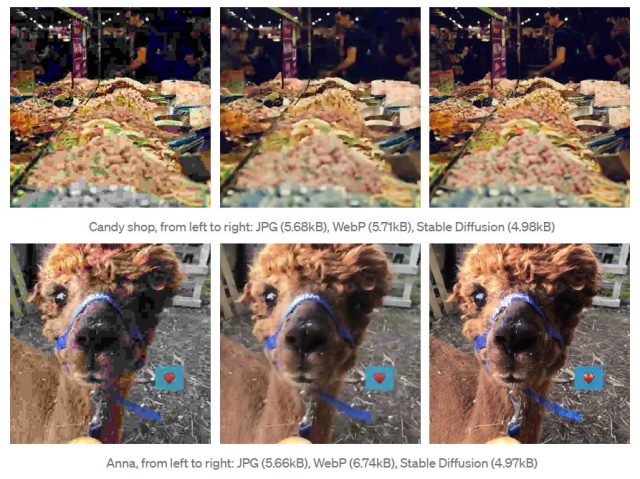

While running tests, Bühlmann found that images compressed with Stable Diffusion looked subjectively better at higher compression ratios (smaller file size) than JPEG or WebP. In one example, he shows a photo of a candy shop that is compressed down to 5.68KB using JPEG, 5.71KB using WebP, and 4.98KB using Stable Diffusion. The Stable Diffusion image appears to have more resolved details and fewer obvious compression artifacts than those compressed in the other formats.
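For a sense of scale, the file sizes quoted for the candy shop example can be compared directly. The short Python sketch below uses only the three figures given above; the variable names are illustrative.

```python
# File sizes in KB, as quoted for the candy shop example.
sizes_kb = {"JPEG": 5.68, "WebP": 5.71, "Stable Diffusion": 4.98}

sd_size = sizes_kb["Stable Diffusion"]

# Percentage by which the Stable Diffusion file is smaller than each alternative.
savings = {
    name: (1 - sd_size / size) * 100
    for name, size in sizes_kb.items()
    if name != "Stable Diffusion"
}

for name, pct in savings.items():
    print(f"Stable Diffusion file is {pct:.1f}% smaller than the {name} file")
```

The difference in file size works out to roughly 12 to 13 percent, so the comparison is mainly about visual quality at a similar size rather than a dramatically smaller file.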

Bühlmann’s method currently comes with significant limitations, however: It handles faces and text poorly, and in some cases, it can actually hallucinate detailed features in the decoded image that were not present in the source image. (You probably don’t want your image compressor inventing details that don’t exist in the original.) Also, decoding requires the 4GB Stable Diffusion weights file and extra decoding time.

While this use of Stable Diffusion is unconventional and more of a fun hack than a practical solution, it could potentially point to a novel future use of image synthesis models. Bühlmann’s code can be found on Google Colab, and you’ll find more technical details about his experiment in his post on Towards AI.