Aurich Lawson | Getty Images

“Artificial Intelligence” as we know it today is, at best, a misnomer. AI is in no way intelligent, but it is artificial. It remains one of the hottest topics in industry and is enjoying renewed interest in academia. This isn’t new: the world has been through a series of AI peaks and valleys over the past 50 years. What makes the current flurry of AI successes different is that modern computing hardware is finally powerful enough to fully implement some wild ideas that have been hanging around for a long time.

Back in the 1950s, in the earliest days of what we now call artificial intelligence, there was a debate over what to name the field. Herbert Simon, co-developer of both the Logic Theory Machine and the General Problem Solver, argued that the field should have the much more anodyne name of “complex information processing.” This certainly doesn’t inspire the awe that “artificial intelligence” does, nor does it convey the idea that machines can think like humans.

However, “complex information processing” is a much better description of what artificial intelligence actually is: parsing complicated data sets and attempting to make inferences from the pile. Some modern examples of AI include speech recognition (in the form of virtual assistants like Siri or Alexa) and systems that determine what’s in a photograph or recommend what to buy or watch next. None of these examples are comparable to human intelligence, but they show we can do remarkable things with enough information processing.

Whether we refer to this field as “complex information processing” or “artificial intelligence” (or the more ominously Skynet-sounding “machine learning”) is irrelevant. Immense amounts of work and human ingenuity have gone into building some absolutely incredible applications. As an example, look at GPT-3, a deep learning model for natural language that can generate text that is often difficult to distinguish from text written by a person (yet can also go hilariously wrong). It’s backed by a neural network model that uses 175 billion parameters to model human language.

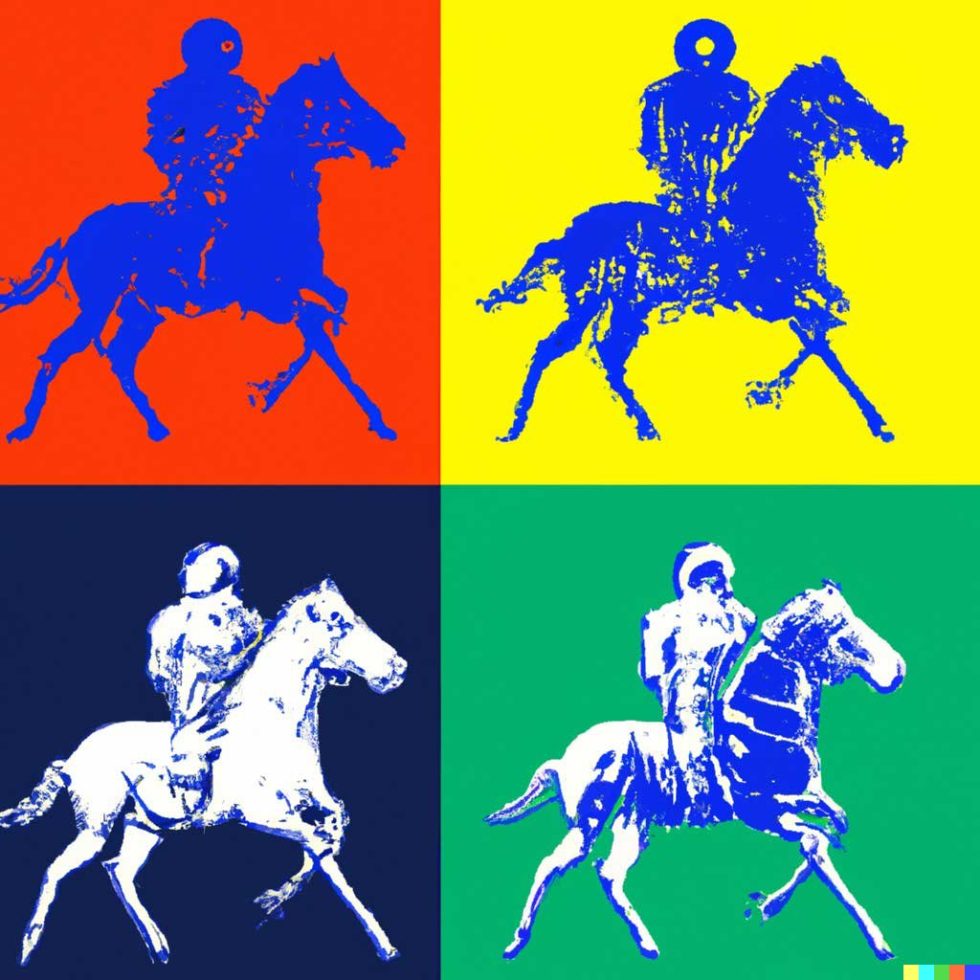

Built on top of GPT-3 is the tool named Dall-E, which will produce an image of any fantastical thing a user requests. The updated 2022 version of the tool, Dall-E 2, lets you go even further, as it can “understand” styles and concepts that are quite abstract. For instance, asking Dall-E to visualize “An astronaut riding a horse in the style of Andy Warhol” will produce a number of images such as this:

Dall-E 2 does not perform a Google search to find a similar image; it creates a picture based on its internal model. This is a new image built from nothing but math.

Not all applications of AI are as groundbreaking as these, but AI and machine learning are finding uses in nearly every industry. Machine learning is quickly becoming a must-have, powering everything from recommendation engines in retail to pipeline safety in oil and gas, as well as diagnosis and patient privacy in healthcare. Not every company has the resources to create tools like Dall-E from scratch, so there’s a lot of demand for affordable, attainable toolsets. The challenge of filling that demand has parallels to the early days of business computing, when computers and computer programs were quickly becoming the technology businesses needed. While not everyone needs to develop the next programming language or operating system, many companies want to leverage the power of these new fields of study, and they need similar tools to help them.